Discover 3D Objects From Embodied Visual Data

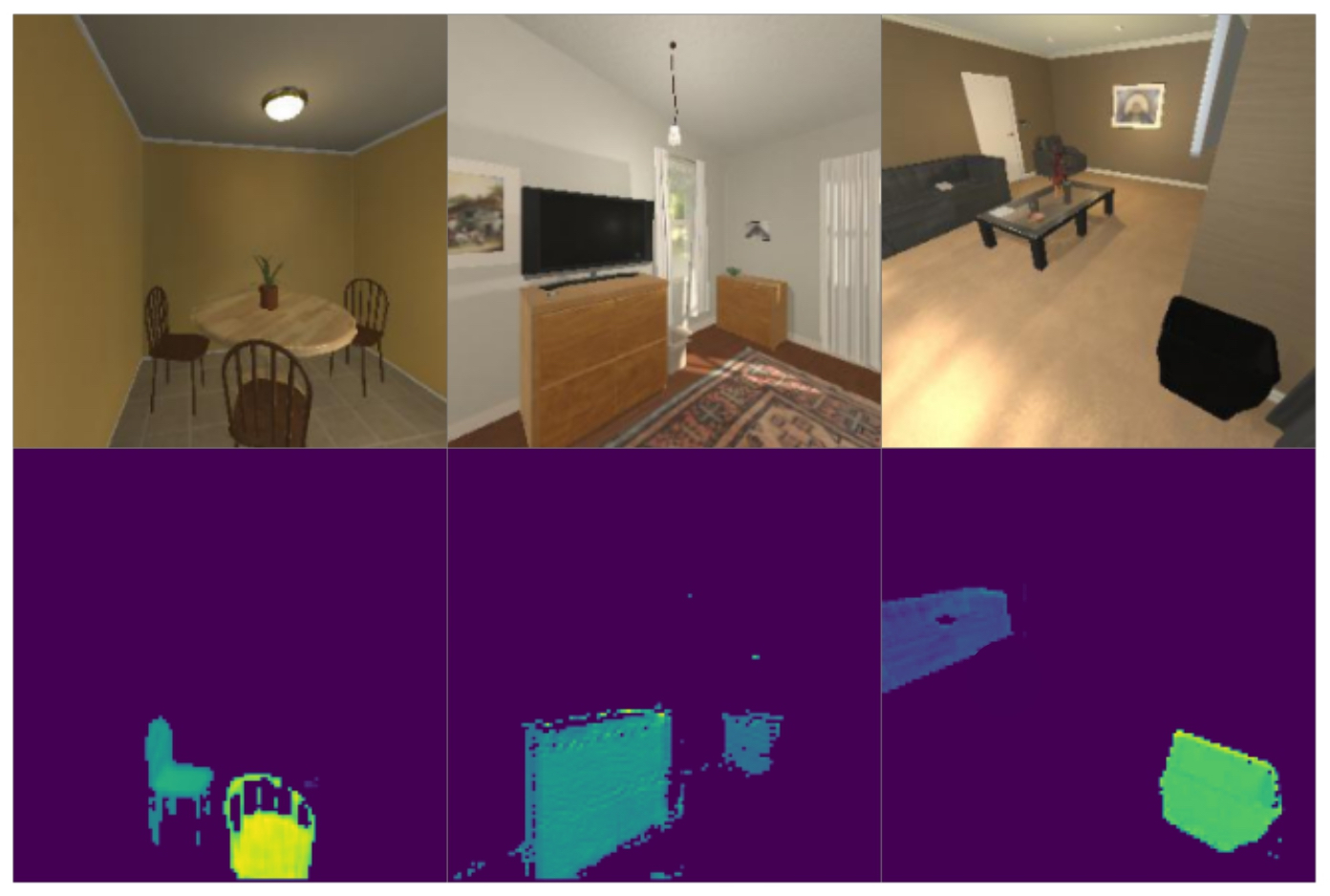

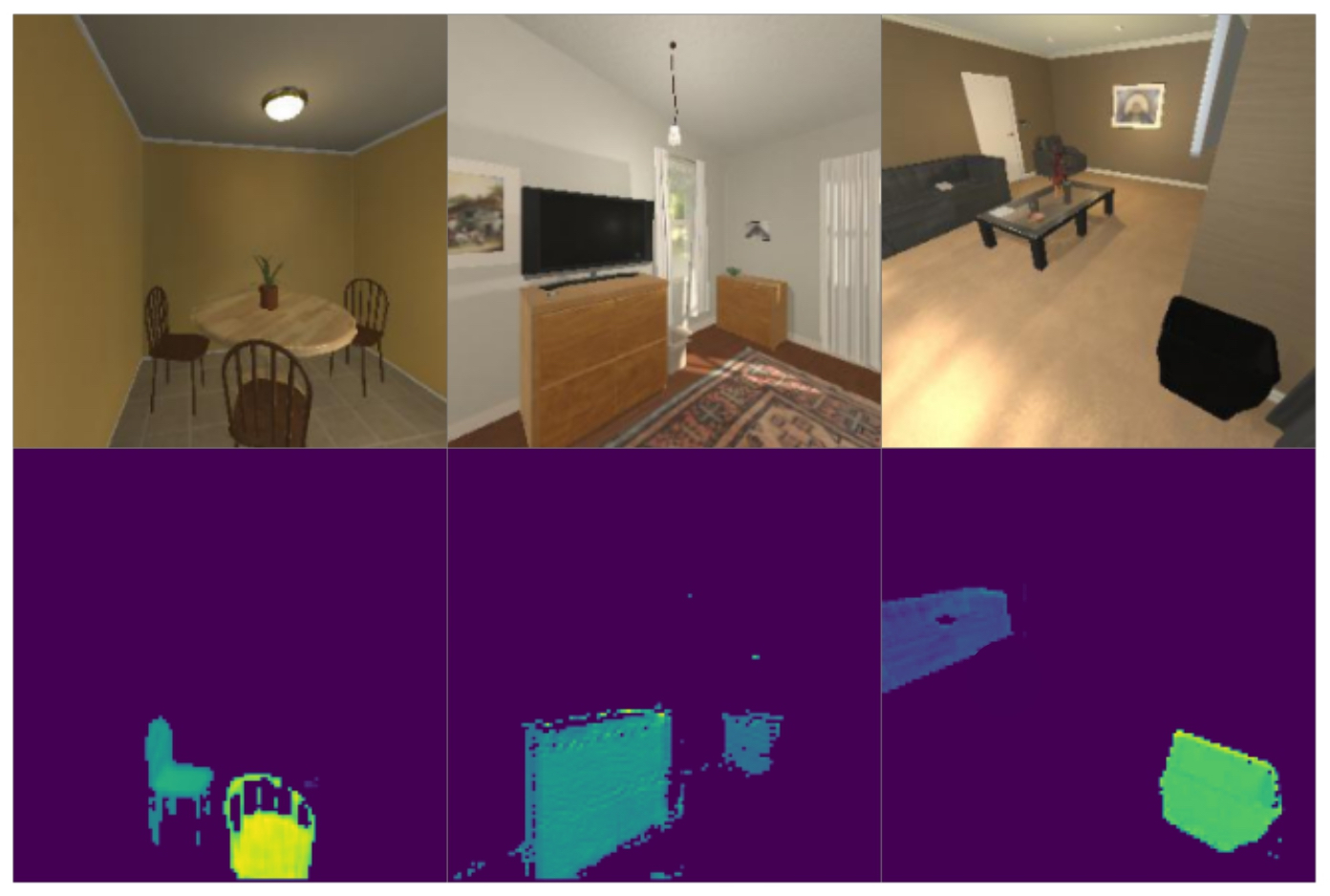

Infer Object Depths Without Supervision

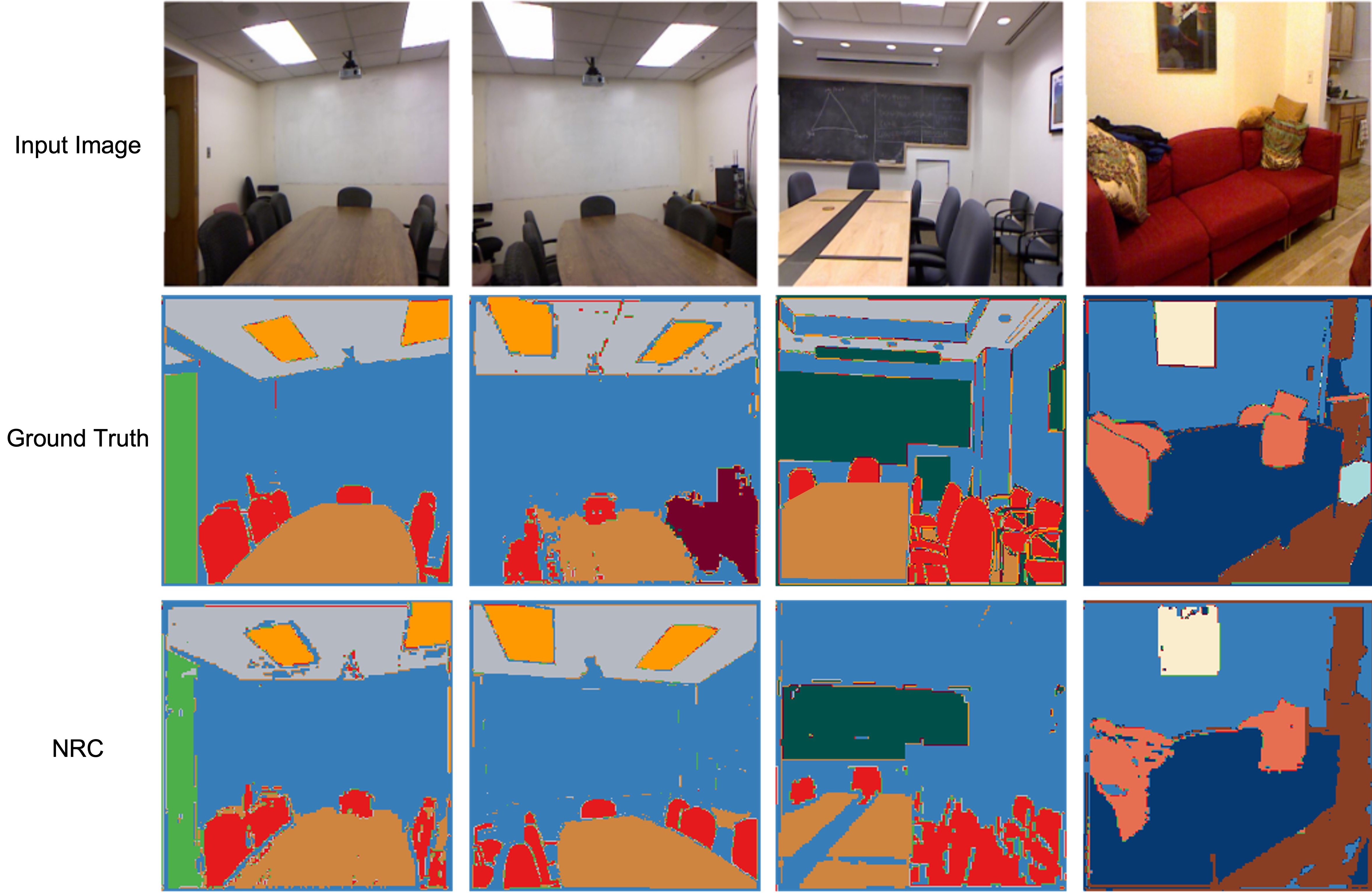

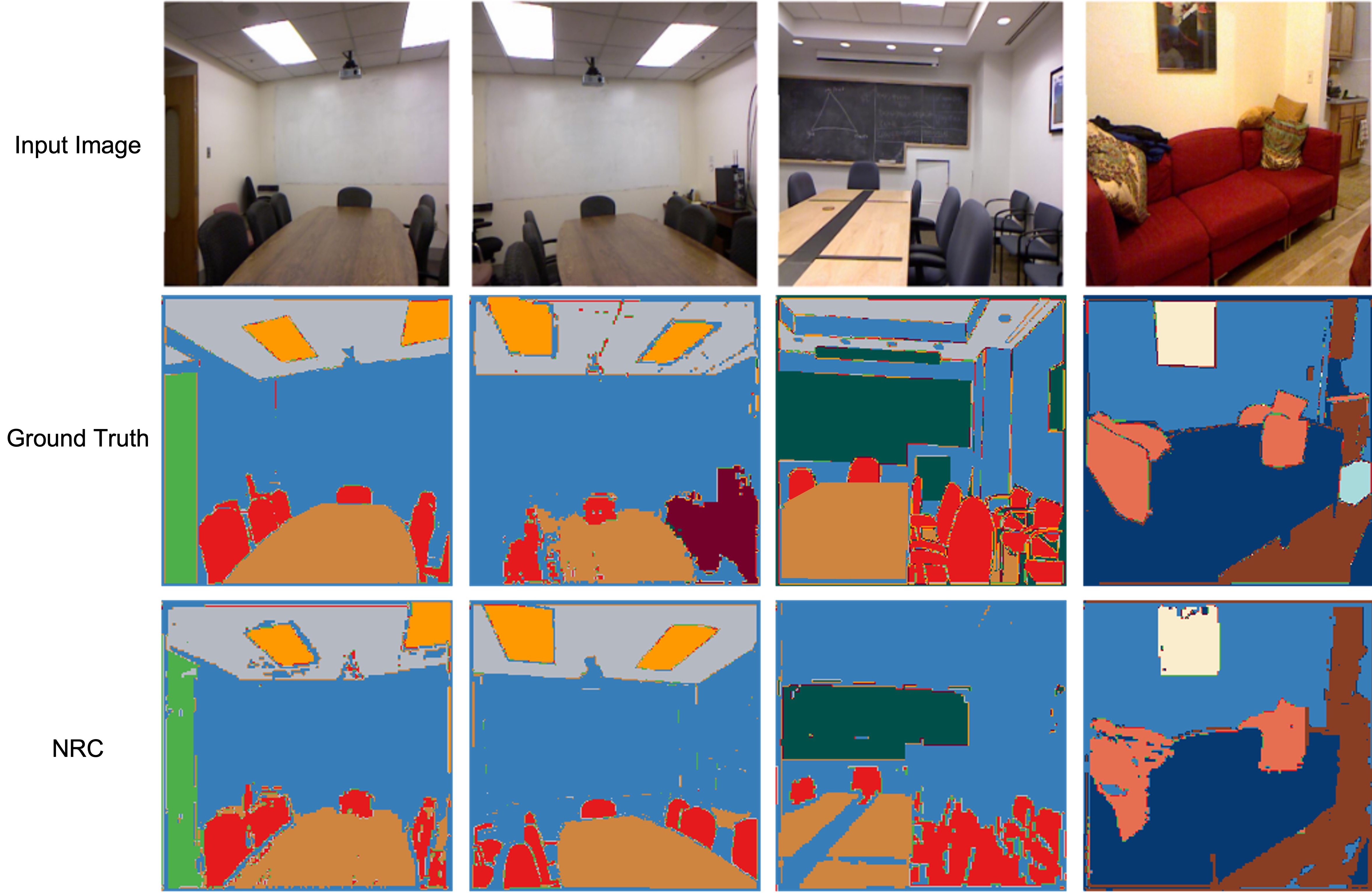

Segment Discovered Real-World Objects

Compositional representations of the world are a promising step towards enabling high-level scene understanding and efficient transfer to downstream tasks. Learning such representations for complex scenes and tasks remains an open challenge. Towards this goal, we introduce Neural Radiance Field Codebooks (NRC), a scalable method for learning object-centric representations through novel view reconstruction. NRC learns to reconstruct scenes from novel views using a dictionary of object codes that are decoded through a volumetric renderer. This enables the discovery of recurring visual and geometric patterns across scenes, which are transferable to downstream tasks. We show that NRC representations transfer well to object navigation in THOR, outperforming 2D and 3D representation learning methods by 3.1% in success rate. We demonstrate that our approach performs unsupervised segmentation on more complex synthetic (THOR) and real (NYU Depth) scenes better than prior methods (29% relative improvement). Finally, we show that NRC improves depth ordering accuracy in THOR by 5.5%.
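The two ingredients named above, a dictionary of codes and a volumetric renderer, can be illustrated with a minimal sketch. This is not the NRC implementation: the nearest-neighbor lookup in `nearest_code` is an assumed selection rule, and `render_ray` is the standard volume-rendering quadrature used by NeRF-style methods. All function names and toy values are hypothetical.

```python
import math

def nearest_code(feature, codebook):
    """Index of the codebook entry closest to `feature` (squared L2).
    Assumption: nearest-neighbor selection, chosen only for illustration."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda k: sq_dist(feature, codebook[k]))

def render_ray(densities, values, deltas):
    """Composite per-sample values along one ray.
    Standard quadrature: w_i = T_i * (1 - exp(-sigma_i * delta_i)),
    where T_i is the transmittance accumulated before sample i."""
    transmittance = 1.0
    weights = []
    for sigma, delta in zip(densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha             # light surviving past it
    rendered = sum(w * v for w, v in zip(weights, values))
    return rendered, weights

# Toy usage: pick a code for a 2-D feature, then render expected depth on a ray.
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 2.0]]   # hypothetical object codes
code_index = nearest_code([0.9, 1.2], codebook)

sample_depths = [1.0, 2.0, 3.0]
densities = [0.0, 0.0, 50.0]                      # empty space, then a surface
expected_depth, weights = render_ray(densities, sample_depths, [1.0, 1.0, 1.0])
```

Passing sample depths as `values` yields an expected depth per ray; comparing such depths between two objects is one simple way to think about the depth-ordering task, though the evaluation protocol itself is not described here.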

@article{wallingford2023neural,
  author  = {Wallingford, Matthew and Kusupati, Aditya and Fang, Alex and Ramanujan, Vivek and Kembhavi, Aniruddha and Mottaghi, Roozbeh and Farhadi, Ali},
  title   = {Neural Radiance Field Codebooks},
  journal = {International Conference on Learning Representations},
  year    = {2023},
}